About Me

I lead enterprise Gen AI/ML/LLM and Data platforms from strategy to production so teams move faster and customers see value sooner. I don't just architect platforms; I ship them with governance, reliability, and cost discipline.

I build cloud-native, petabyte-scale data platforms on AWS/Azure/GCP (Snowflake, Databricks, Firebolt) and operationalize GenAI (RAG, agents, LLMOps). My work spans Healthcare (Optum), Retail/CPG (Costco, Level10), Ad tech (Samsung Ads), Media (Condé Nast), and Cisco networking.

Outcomes

• $1B+ impact at Samsung Ads (unified data platform, ML personalization)

• $13B+ Cisco CX platform transition to AWS cloud-native architecture and global team modernization

• $100M+ value from an AI portfolio (agents, AI search); LLM/RAG helpdesk 35% faster, 98% CSAT

• 15 new logos (LTM), avg. ACV ~$700k; sales cycle −50%; POC 16→12 weeks

• +45% delivery velocity via Architecture CoE/ARB, reference architectures, and accelerators

How I Work

• Platforms as products (roadmaps, SLAs, chargeback/showback)

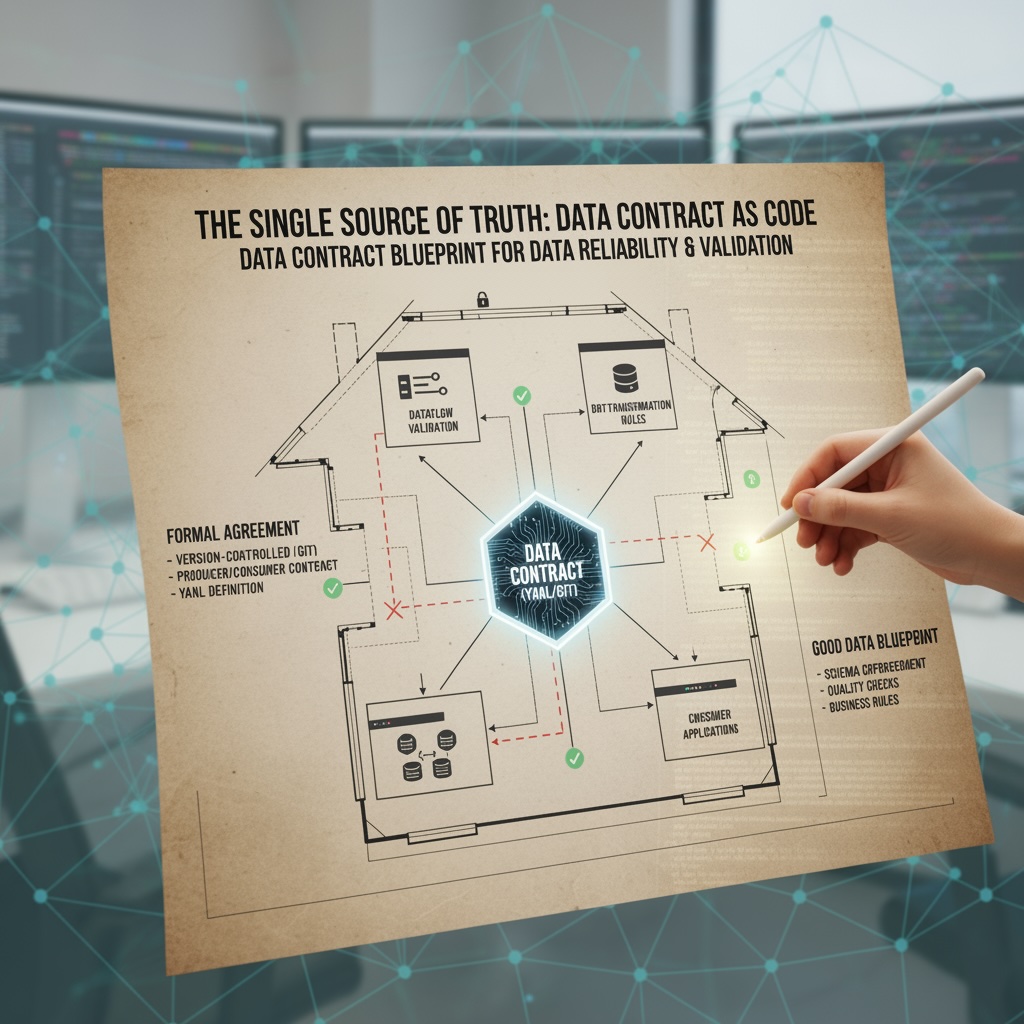

• Govern to go faster (privacy-by-design, data contracts, lineage, DQ SLAs)

• Automate the boring (DataOps/MLOps/LLMOps; repeatable patterns over one-offs)

• Partner smartly (co-sell with AWS/Azure/GCP, Snowflake/Databricks/Firebolt; advisor to DataStax, Elastic, Oracle)

• People first (grow onshore/offshore teams with clarity, ownership, coaching)

My Ethos

💡 Prioritize impact over outputs

💡 Design governance into systems

💡 Automate and standardize via DataOps/MLOps/LLMOps

💡 Move fast, adapt, and take action—progress over perfection

💡 Use AI when it adds real leverage—skip it when it doesn't

💡 Reuse proven patterns—clarity over novelty

💡 Build in privacy, security, and cost discipline

💡 Develop people and partners openly

Top Skills

Enterprise Architecture • Data Modernization • Generative AI • Large Language Models (LLM) • Interpersonal Leadership

Core Competencies

👔 Leadership • Team Building & Mentorship • IT & Operations • Strategy & Governance

🏗️ Architecture • Data Strategy & Governance • Modernization • Platform-as-Product • Data Products & Monetization

☁️ Platforms • Cloud Data Platforms (AWS, Azure, GCP, Snowflake, Databricks, Firebolt)

🤖 AI/ML & Analytics • Generative AI (GenAI) • Predictive & Prescriptive Analytics

🛡️ Data Management & Trust • MDM • Data Contracts • Data Quality & Integrity • Security & Privacy • DataOps • MLOps • LLMOps

My Technology Blogs 📝

The convergence of Generative AI and Web3 holds immense potential to revolutionize various sectors, including Decentralized Autonomous Organizations (DAOs), gaming and virtual worlds, and AI-generated NFTs and digital art. Generative AI and Web3 are two promising technologies that are rapidly evolving to reshape industries in novel ways. Generative AI focuses on creating new data, such as text, images, and music, while Web3 is the next generation of the internet, built on blockchain technology.

Explore the creation of an advanced document-based question-answering system using LangChain and Pinecone. By capitalizing on the latest advancements in large language models (LLMs) like OpenAI GPT-4, we'll construct a document question-answer system with the LangChain and Pinecone.

A Global Data Strategy framework serves as a guiding light for the organization's data strategy, helping it to stay on course and navigate towards its goals. It is a reliable tool that helps organizations navigate their way through the complex terrain of data strategy, with the pyramid structure representing a solid foundation and the mission framework serving as a clear guide towards achieving their goals.

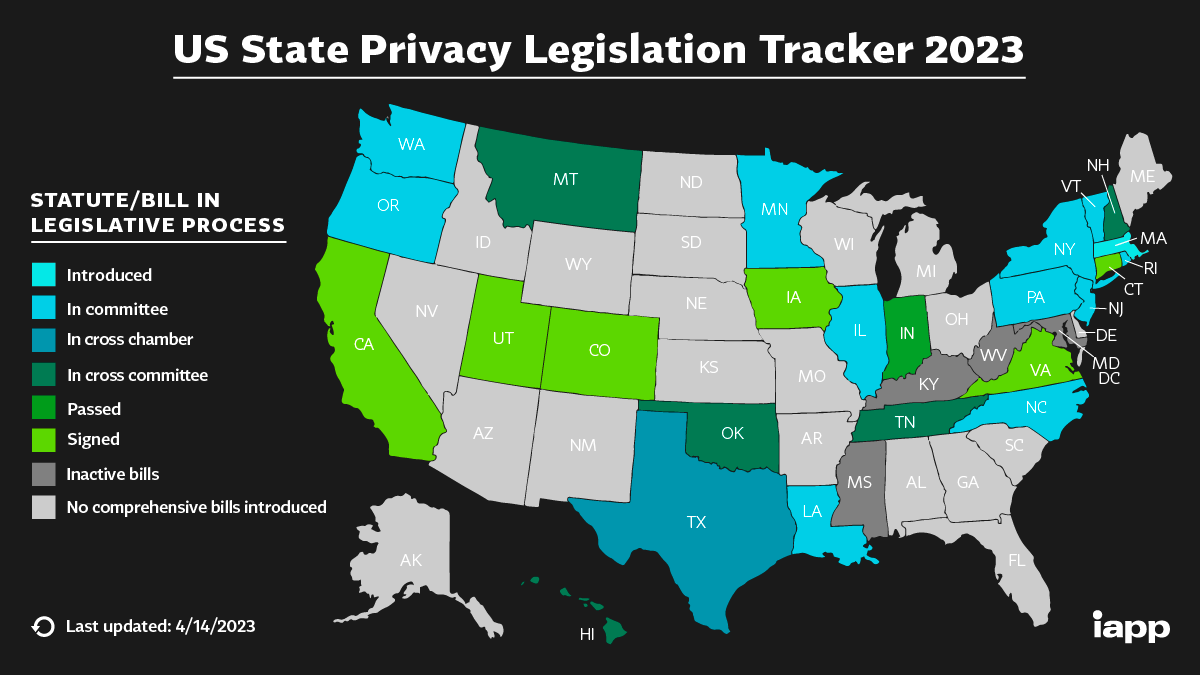

A Global Data Strategy centered around a Privacy by Design Methodology means that the organization's data strategy is built with privacy in mind from the start, rather than added as an afterthought.

The DevSecOps pipeline is a methodology that emphasizes the integration of security practices into every stage of the software development lifecycle (SDLC). Identify the list of tools that provide advanced security features and functionalities to help organizations with a higher focus on security to enhance their overall security posture, detect vulnerabilities, and ensure the robustness of their applications and infrastructure.

Meeting fatigue is real, and when calendars become a solid block of meetings, it can be challenging to find time to complete essential tasks. Using the framework will help maximize productivity and minimize meeting fatigue.

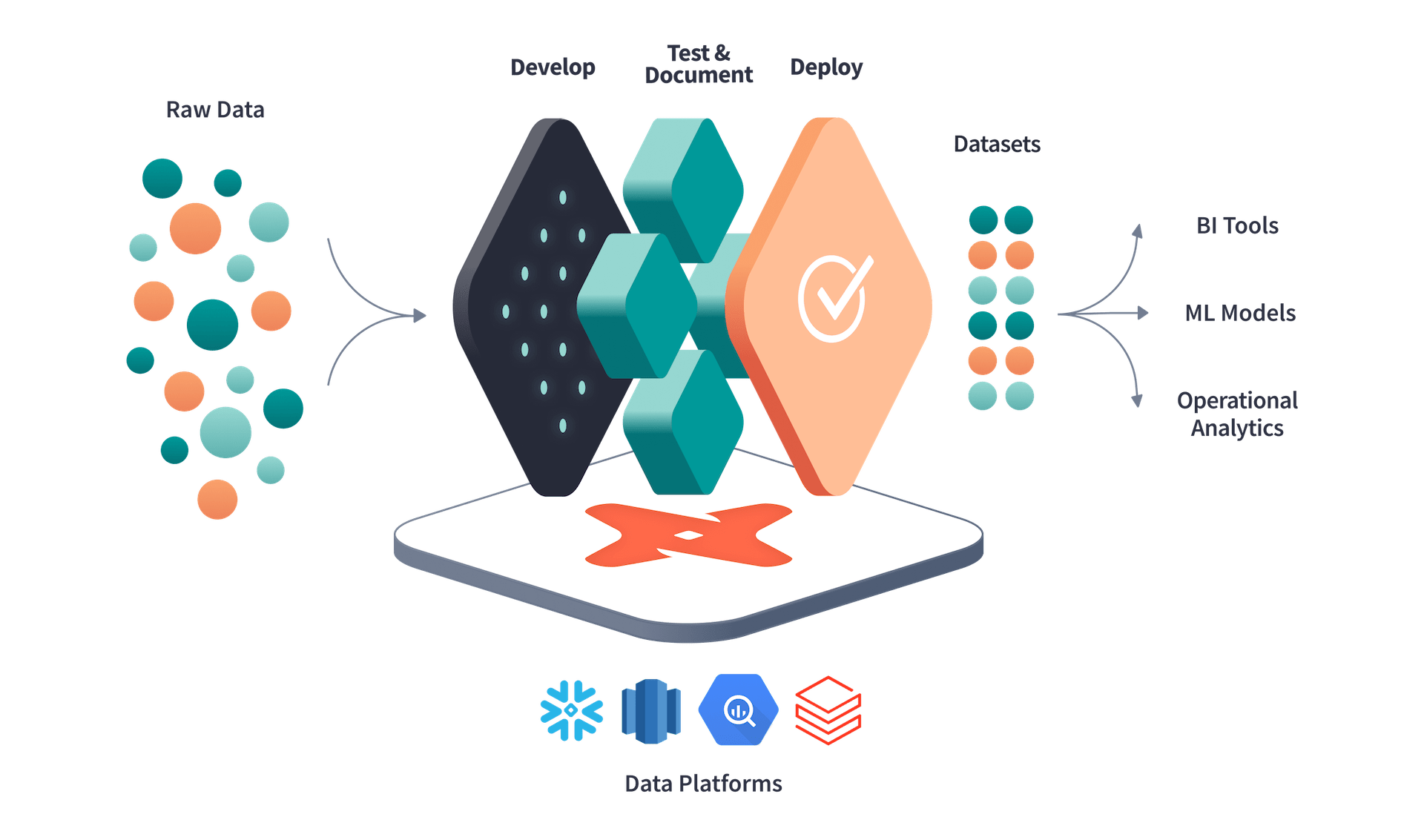

A database build tool is a software tool designed to manage the creation and modification of database schema and objects, and to automate the deployment of those changes to different environments. DBT vs Flyway

Avro and JSON are both data serialization formats used in distributed computing systems, but they have several differences. Avro is a binary format that is more compact and efficient than JSON, making it more suitable for use in distributed systems. It also supports schema evolution and is language independent. On the other hand, JSON is a text-based format that is more human-readable than Avro, and it is more widely used because it is supported by many programming languages and frameworks.

What are the new table types in snowflake in 2023? GA, PuPr and PrPr

Hashing is a technique used to map data of arbitrary size to a fixed size. It is used in a variety of applications such as data storage, data transmission, data compression, data indexing, and data encryption. Hashing is a one-way function, which means that it is easy to compute the hash value for a given input, but it is computationally infeasible to determine the input given the hash value. This makes hashing a useful technique for data security.

Debezium is a powerful platform that can be used in a variety of use cases where real-time data capture and streaming are required. Its flexibility, scalability, and extensibility make it a popular choice among organizations that need to build real-time data pipelines and microservices-based architectures.

Understanding the Phases of a Project; Focusing on Outcomes instead of Activity; Using the Cone of Uncertainty Framework

Concurrency allows a system to execute multiple tasks or processes simultaneously, which can improve performance, resource utilization, responsiveness, and scalability. However, there are potential pitfalls such as deadlocks, race conditions, synchronization overhead, debugging and testing challenges, and resource contention. To leverage concurrency effectively, it is important to design and implement concurrent systems carefully and use appropriate synchronization mechanisms and testing approaches to identify and mitigate potential issues.

Clustering in Snowflake is a way of organizing data in tables to make querying more efficient. It is based on the unique concept of micro-partitions, which is different from the static partitioning of tables used in traditional data warehouses

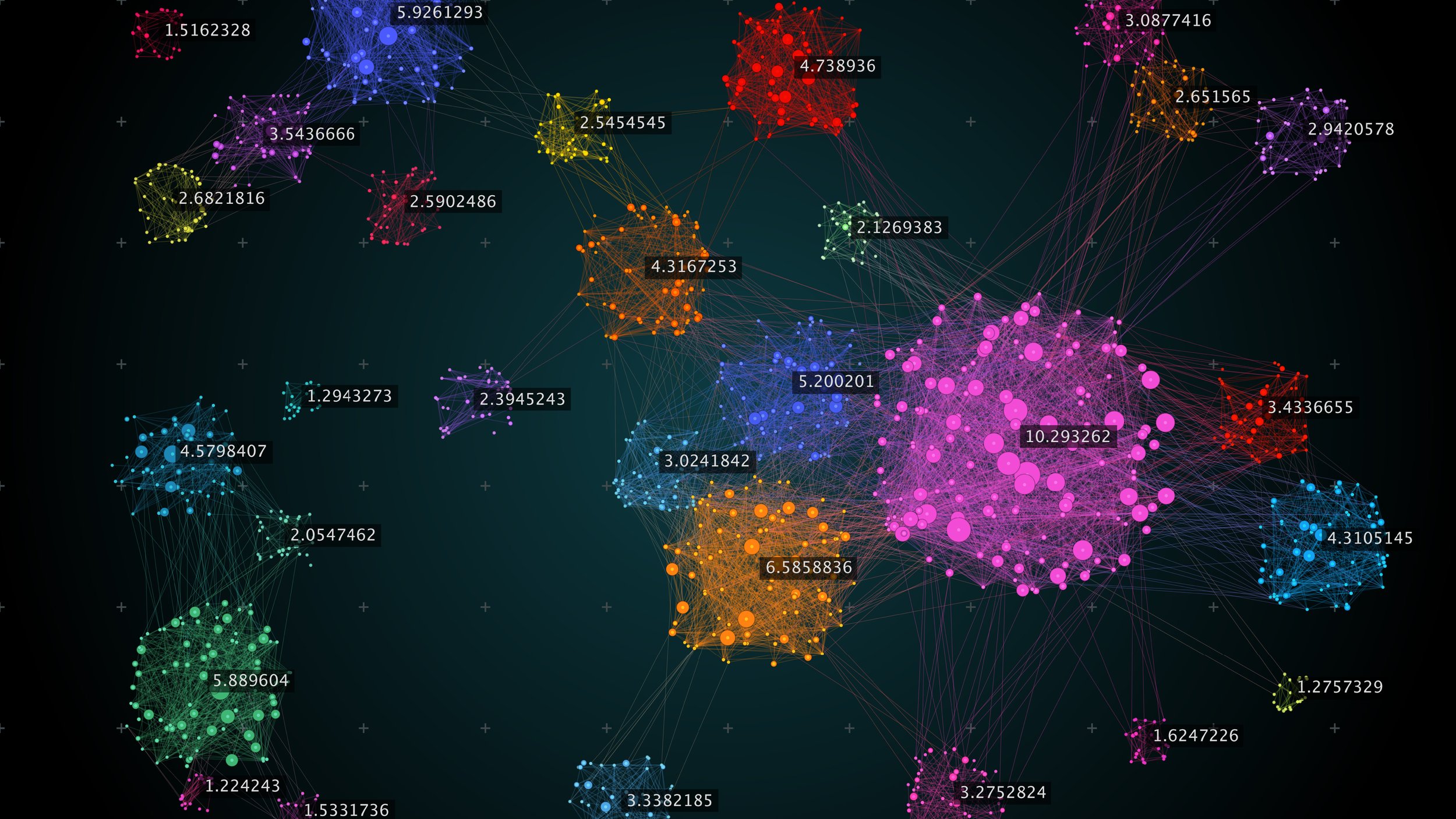

Spark optimizations are techniques used to improve the performance and efficiency of Spark applications. Key optimizations include memory management, data partitioning, caching, parallelism, resource management, and optimization libraries. These techniques enable faster and more efficient processing of large datasets, making Spark a popular choice for big data processing.

Apache Kafka is a powerful open-source streaming platform that enables businesses to manage data streams effectively. However, building enterprise-grade solutions with Kafka requires a comprehensive understanding of its key components. In this article, we will explore the four core components of Kafka and their purpose in developing a robust streaming platform.

Snowflake is a cloud-native, SQL data warehouse built to let users put all their data in one place for ease of access and analysis. Amazon Redshift boasts low maintenance costs, high speed, strong performance, and high availability.

Snowflake is an analytic data warehousewas built from the ground up for the cloud to optimize loading, processing and query performance for very large volumes of data. It features storage, compute, and global services layers that are physically separated but logically integrated.

How do you build a secure environment in AWS Cloud? There are a few good security practices and guidelines, that must be incorporated into a full, end-to-end secure design.

Why are Data lakes central to the modern data architecture?

Sample Architecture for creating a Data Pipeline. The architecture depicts the components and the data flow needed for a event-driven batch analytics system.

A security primer for Data Lakes; Data Security and Data Cataloging for data lakes

Learn about the final and crucial considerations for setting up your Data Lakes. Confirm if data lakes are the best choice and implement the right level discipline.

Understanding the science behind text analysis requires special algorithms that determine how a string field in a document is transformed into terms in an inverted index. This blog talks about analyzers which are a combinations of tokenizers, token filters, and character filters.

I will take you through my journey of overcoming obstacles by embracing hybrid Cloud environments, modern tools and technologies for digital transformation so we could reap the benefits of a solid, long-term solution.

How do you design good databases? Experienced database designers perform all the necessary design functions, in their proper sequence, leaving out nothing. There are a few good database design techniques and guidelines, that must be incorporated into a full, end-to-end database design method.

What are some of the core attributes of a distributed storage system? With a strong understanding of the fundamentals of distributed storage systems, we are able to categorize and evaluate new systems

My experiences over the last few years have made Cassandra, Elasticsearch, Snowflake, Spark and others my favorites. Learn more about the technologies that will continue to grow in 2021.

Apache Cassandra is a distributed, NoSQL Database management system (DBMS) designed for high volumes of data. Learn more about the Architecture and the derisk approach when working with Cassandra.

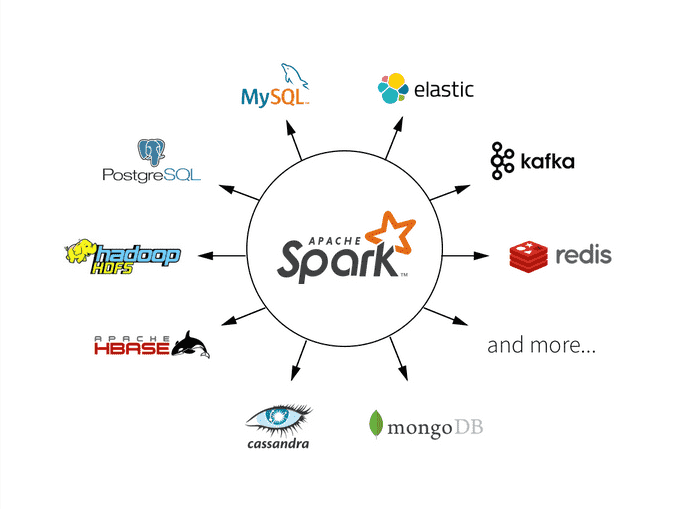

Apache Spark is a unified analytics engine for big data processing with a myriad of built-in modules for Machine Learning, Streaming, SQL, and Graph Processing. Learn about the best practices when creating Spark integration projects.

ElasticSearch is a search engine based on the Lucene library that provides a distributed, multitenant-capable full-text search engine. Learn about ElasticSearch and its best practices when using it.