Privacy By Design - A Key Aspect of a Global Data Strategy

Introduction

A Global Data Strategy centered around a Privacy by Design Methodology means that the organization's data strategy is built with privacy in mind from the start, rather than added as an afterthought. This ensures that personal data is collected, used, and protected in a way that aligns with privacy best practices and relevant laws and regulations.

Adherence to regulatory restrictions and laws on a regional basis means that the organization is aware of and complies with the privacy laws and regulations of each region where it operates. This includes understanding how laws and regulations differ between countries and ensuring that the organization's data processing practices align with these restrictions.

A clear and transparent methodology for obtaining consumer consent for the processing of consumer data means that the organization has a process in place for obtaining explicit and informed consent from individuals before their data is processed. This process should be clear and easy to understand for consumers and should outline the purposes for which the data will be used.

Clear, transparent, and consistent data usage guidelines for internal teams to adhere to mean that the organization has established guidelines and policies for its employees on handling personal data. These guidelines should be clear and easily accessible and should provide guidance on best practices for collecting, processing, and storing data.

An auditing/maintenance process to ensure products continue to honor user rights and updates to privacy regulations means that the organization has established a process for regularly reviewing and auditing its data processing practices to ensure compliance with privacy regulations and user rights. This process should also ensure that any updates to privacy regulations are quickly incorporated into the organization's practices.

Definition of Terms

US: refers to data privacy regulations and guidelines in the United States. US refers to the United States as a whole and may include federal, state, and local laws and regulations related to data privacy.

CCPA: refers to the California Consumer Privacy Act, which is a state-level privacy law that went into effect in 2020. The CCPA gives California residents more control over their personal information, including the right to know what information is being collected about them, the right to request that their information be deleted, and the right to opt out of the sale of their personal information.

CPRA: refers to the California Privacy Rights Act, which is a California state law that updates and expands the California Consumer Privacy Act, providing Californians with additional privacy rights and protections over their personal information. It introduces new requirements for businesses and establishes a new regulatory agency to enforce the law.

COPPA: refers to the Children's Online Privacy Protection Act, which is a federal law that requires website and app operators to obtain parental consent before collecting personal information from children under the age of 13.

FTC Guidelines: refers to guidelines issued by the Federal Trade Commission, which is a government agency responsible for enforcing federal consumer protection laws. The FTC issues guidelines related to data privacy and security, including guidance on best practices for protecting consumer data and complying with data privacy regulations.

EU: refers to data privacy regulations and guidelines in the European Union. EU refers to the European Union as a whole and may include laws and regulations related to data privacy at both the EU and national levels.

GDPR: refers to the General Data Protection Regulation, which is an EU-wide regulation that went into effect in 2018. The GDPR provides a comprehensive framework for data protection, including guidelines for collecting, processing and storing personal data. It also gives individuals more control over their personal data, including the right to access, rectify, and erase their data.

ePrivacy: refers to the ePrivacy Regulation, which is a proposed EU-wide regulation that would update the current ePrivacy Directive. The ePrivacy Regulation would provide additional rules for the processing of electronic communications data, including data related to email, SMS, and instant messaging. It would also complement the GDPR by providing additional guidance on electronic communications data.

Privacy Principles Framework

Privacy by Design is the concept of integrating privacy protection measures into the design of products, services, and systems from the outset. Customer data is protected by default, and this protection becomes an integral part of the user experience, receiving the same level of importance as functionality. The principles of Privacy by Design can be applied to various aspects of information processes, including system designs, organizational priorities, project objectives, standards, protocols, and business practices. This approach ensures that privacy is not an afterthought, but a guiding force that shapes the entire information process.

| PbD Methodology Steps | Privacy by Design Principles | Description |
|---|---|---|
| Identify the Need for PbD | Principle 1: Proactive, Not Reactive; Preventative, Not Remedial | Be proactive, not reactive: Focus on preventing privacy issues before they occur, rather than responding to them after the fact. |
| Define the Scope of PbD | Principle 2: Privacy as a Default Setting | Make privacy the default setting: Privacy protection should be integrated into all products and services by default, so that users do not have to take any action to protect their data. |
| Conduct a Privacy Impact Assessment (PIA) | Principle 3: Privacy Embedded into Design | Embed privacy into design: Privacy should be built into the design of products and services from the outset, rather than being added on later as an afterthought. |
| Design and Implement PbD Measures | Principle 4: Full Functionality - Positive Sum, Not Zero Sum | Ensure full functionality: Privacy measures should not compromise the functionality of products and services, but rather should work in tandem with them to benefit all stakeholders. |
| Maintain and Improve PbD Measures | Principle 5: End-to-End Security - Full Life Cycle Protection | Provide end-to-end security: Data should be protected throughout the entire lifecycle of products and services, not just at specific stages of development or use. |
| Review and Update the PbD Strategy | Principle 6: Visibility and Transparency - Keep it Open | Be transparent: Organizations should be transparent about their privacy practices and provide individuals with easy access to information about how their data is being used and protected. |
| Audit and Verify PbD Measures | Principle 7: Respect User Privacy - Keep it User Centric | Respect user privacy: Individuals should have control over their personal data and be able to make informed decisions about how it is used and protected. |
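As one possible illustration of Principle 2 in the table above, the sketch below models per-user settings whose defaults are the most protective values, so a user who takes no action is already protected. The class name, field names, and the 30-day retention value are all hypothetical and are not taken from any specific product.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PrivacySettings:
    """Hypothetical per-user settings; every default is the most protective value."""
    share_with_third_parties: bool = False  # no sharing unless the user opts in
    targeted_advertising: bool = False      # no ad profiling by default
    analytics_tracking: bool = False        # optional analytics stay off
    retention_days: int = 30                # illustrative retention period


def settings_for_new_user() -> PrivacySettings:
    # A new user who takes no action gets the protective defaults.
    return PrivacySettings()


def opt_in_to_ads(current: PrivacySettings) -> PrivacySettings:
    # Relaxing a default requires an explicit, separate user action.
    return replace(current, targeted_advertising=True)
```

Making the settings object immutable means that every relaxation of a default produces a new value, which is easier to log and audit than an in-place change.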

Privacy Compliance - Multi-Layered Approach

Legislation - Privacy laws (e.g., CCPA, GDPR, CPRA): Organizations must comply with privacy laws and regulations that protect the personal information of individuals. Examples include the California Consumer Privacy Act (CCPA), the General Data Protection Regulation (GDPR) in the European Union, and the California Privacy Rights Act (CPRA).

Regulatory guidance (e.g., FTC rules): Regulatory agencies such as the Federal Trade Commission (FTC) provide guidance on how organizations can comply with privacy laws and regulations.

Industry standards (e.g., IAB, NAI, DAA): Industry associations and groups, such as the Interactive Advertising Bureau (IAB), Network Advertising Initiative (NAI), and Digital Advertising Alliance (DAA), provide privacy guidelines and self-regulatory principles that organizations can follow.

Contractual + Ethical requirements/obligations (both clients and suppliers): Organizations must meet contractual obligations and ethical requirements with their clients and suppliers, which may include privacy and data protection requirements.

Internal policies (GPO + Business driven): Organizations should establish internal policies and procedures for privacy and data protection, which may be driven by a Global Privacy Office (GPO) or business units.

Privacy-Focused Educational Program: Organizations should provide privacy-focused education and training to their employees to promote awareness and understanding of privacy and data protection issues.

Brand reputation with consumers: Organizations must protect their brand reputation with consumers by being transparent about their privacy practices and ensuring that they respect individuals' privacy rights.

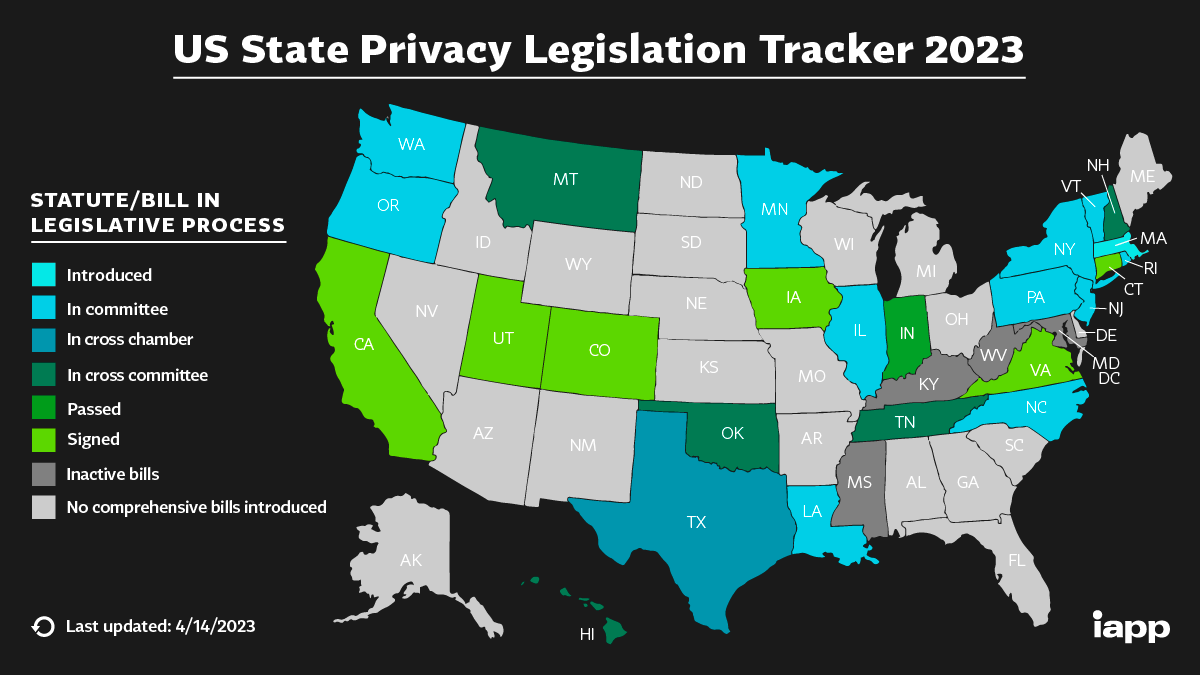

New Laws

The California Privacy Rights Act (CPRA) is a new state law that expands and strengthens the existing California Consumer Privacy Act (CCPA). The CPRA introduces new requirements for organizations that handle the personal information of California residents, such as enhanced disclosures, expanded consumer rights, and data minimization. Organizations must also conduct regular risk assessments, implement contractual obligations with service providers, and maintain records of their data processing activities to demonstrate compliance with CPRA requirements. The CPRA is intended to provide Californians with additional privacy rights and protections, and organizations must take a comprehensive and proactive approach to comply with the new law.

The Virginia Consumer Data Protection Act (CDPA) took effect on January 1, 2023. It applies to businesses that control or process the personal data of at least 100,000 consumers, or that process the personal data of at least 25,000 consumers and derive more than 50% of their gross revenue from the sale of personal data. The CDPA requires businesses to establish data protection policies, disclose their data collection practices, and allow consumers to request access to, correction of, and deletion of their personal information. In addition, businesses are required to obtain affirmative consent before collecting and processing certain sensitive data. The CDPA imposes penalties for noncompliance; data breach notification is governed by a separate Virginia statute rather than by the CDPA itself.

The Colorado Privacy Act (CPA) took effect on July 1, 2023. It applies to businesses that process the personal data of 100,000 or more consumers annually, or that process the personal data of 25,000 or more consumers and also derive revenue from the sale of personal data. The act requires businesses to establish data protection policies, disclose their data collection practices, and allow consumers to opt out of the sale of their personal information. Consumers can also request access to, correction of, and deletion of their personal information. Like the CDPA, the CPA imposes penalties for noncompliance, while data breach notification is governed by a separate Colorado statute.

Under the CPA, a Universal Opt-Out Mechanism is required so that users can opt out of targeted advertising and profiling. Data protection assessments (DPAs) are also required under the CPA and CDPA when personal information is used for targeted advertising. All three laws (CPRA, CDPA, and CPA) have data minimization requirements that limit the collection, use, and retention of personal information. Cure periods are also shrinking: the CPRA removes the CCPA's automatic 30-day cure period for administrative enforcement, and the CPA's 60-day cure period expired on January 1, 2025, although the CDPA retains a 30-day cure period. Companies should therefore plan to comply from the effective date rather than rely on a grace period.
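The applicability thresholds for the CDPA and CPA described above can be expressed as a small check. This is only a sketch of the thresholds as summarized in this section, not legal advice: the `BusinessProfile` class and its field names are hypothetical, and the Virginia revenue test uses a strict comparison because the statute's wording is "over 50 percent".

```python
from dataclasses import dataclass


@dataclass
class BusinessProfile:
    """Hypothetical inputs; field names are illustrative, not statutory terms."""
    consumers_processed: int         # in-state consumers whose data is processed per year
    revenue_share_from_sales: float  # fraction of gross revenue from selling personal data


def cdpa_applies(p: BusinessProfile) -> bool:
    # Virginia: at least 100,000 consumers, OR at least 25,000 consumers
    # combined with over 50% of gross revenue from the sale of personal data.
    return p.consumers_processed >= 100_000 or (
        p.consumers_processed >= 25_000 and p.revenue_share_from_sales > 0.50
    )


def cpa_applies(p: BusinessProfile) -> bool:
    # Colorado: at least 100,000 consumers, OR at least 25,000 consumers
    # combined with any revenue from the sale of personal data.
    return p.consumers_processed >= 100_000 or (
        p.consumers_processed >= 25_000 and p.revenue_share_from_sales > 0
    )
```

For example, a business with 30,000 consumers and 10% of revenue from data sales would fall under the CPA check but not the CDPA check in this sketch.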

Triggers for a privacy assessment

The following ten scenarios may trigger the need for a privacy assessment or review of how personal information (PI) is handled in an organization:

| Situation | Action Required |
|---|---|
| New Vendor Integration | Conduct a privacy assessment to ensure compliance with relevant privacy laws and regulations. |
| PI being shared with 3P vendor (New/Existing) | Conduct a privacy review to ensure compliance with relevant laws and regulations. |
| Creation of brand new product involving PI | Conduct a privacy assessment to ensure compliance with relevant laws and regulations. |
| PI Collection and usage in a new region | Conduct a privacy review to ensure compliance with relevant laws and regulations in that region. |
| Collection of new PI type | Conduct a privacy assessment to ensure compliance with relevant laws and regulations. |
| Linkage/Mapping of PI | Conduct a privacy review to ensure compliance with relevant laws and regulations. |
| Change of PI Flow | Conduct a privacy assessment to ensure compliance with relevant laws and regulations. |
| New Regulation | Conduct a privacy review to ensure compliance with the new requirements. |
| PI Retention Increase | Conduct a privacy assessment to ensure compliance with relevant laws and regulations. |
| Use of PI for ML purposes | Conduct a privacy review to ensure compliance with relevant laws and regulations. |
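One way to make the triggers in the table above operational is to encode them as flags on a change request and check them automatically, for example in an intake form or a release checklist. The sketch below assumes a hypothetical change-request dictionary whose keys are the lowercase trigger names; neither the dictionary format nor the function names come from an existing tool.

```python
from enum import Enum


class Trigger(Enum):
    NEW_VENDOR = "New vendor integration"
    PI_SHARED_WITH_3P = "PI shared with third-party vendor"
    NEW_PRODUCT_WITH_PI = "New product involving PI"
    NEW_REGION = "PI collection and usage in a new region"
    NEW_PI_TYPE = "Collection of new PI type"
    PI_LINKAGE = "Linkage/mapping of PI"
    PI_FLOW_CHANGE = "Change of PI flow"
    NEW_REGULATION = "New regulation"
    RETENTION_INCREASE = "PI retention increase"
    ML_USE = "Use of PI for ML purposes"


def triggered(change: dict) -> list:
    """Return the triggers whose flag is True in a hypothetical change-request dict."""
    return [t for t in Trigger if change.get(t.name.lower(), False)]


def needs_privacy_assessment(change: dict) -> bool:
    # Any single trigger is enough to require an assessment or review.
    return bool(triggered(change))
```

A change request such as `{"new_region": True, "ml_use": True}` would report two triggers, and the list of matched triggers can be attached to the assessment ticket.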

An approach to de-identifying customers' personal data

Description: The customer personal data deletion use case involves the removal of personally identifiable information (PII) upon a customer's request. This PII may exist in various systems and databases within an organization, including large fact tables, data lakes, and data warehouses.

Potential Solution: To comply with the customer's data deletion request, a separate mapping table is created to handle the deletion of PII values. This mapping table maintains traceability to the original PII through a randomly generated hashed ID.

When new data is loaded into the fact table, the PII values are replaced with the corresponding hashed ID. The hashed ID and PII value mapping is stored in the separate mapping table. When there is a request for PII deletion, only the values in the mapping table are deleted.

To further protect customer privacy, the large fact table can be periodically purged or have a data retention policy applied to completely erase PII.

This solution enables organizations to comply with customer data deletion requests while still maintaining the integrity of the original data.

To implement the above solution in SQL, we can create two tables - one for the large fact table and the other for the hashed ID mapping table.

    -- Create large fact table (MySQL syntax); it stores hashed IDs, never raw PII
    CREATE TABLE fact_table (
        id INTEGER PRIMARY KEY,
        hashed_id VARCHAR(255),
        value1 VARCHAR(255),
        value2 VARCHAR(255),
        value3 VARCHAR(255)
    );

    -- Create hashed ID mapping table; this is the only place the PII value is stored
    CREATE TABLE hashed_id_mapping (
        id INTEGER PRIMARY KEY,
        hashed_id VARCHAR(255),
        pii_value VARCHAR(255),
        delete_date DATE
    );

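When new data is loaded into the fact table, we generate a random hashed ID and store it in place of the PII value. The ID should not be a plain hash of the PII itself (for example SHA2('John Doe', 256)): such a hash is deterministic, so anyone can recompute it from a candidate value and re-link the fact rows even after the mapping record is deleted.

    -- Generate a random hashed ID that is not derived from the PII (MySQL syntax)
    SET @hashed_id = SHA2(RANDOM_BYTES(32), 256);

    -- Insert new data into fact table, storing the hashed ID instead of the PII
    INSERT INTO fact_table (id, hashed_id, value1, value2, value3)
    VALUES (1, @hashed_id, 'value1', 'value2', 'value3');

We can then add a new record to the hashed ID mapping table to maintain the mapping between the hashed ID and the PII value.

    -- Add new record to hashed ID mapping table, reusing the same hashed ID
    INSERT INTO hashed_id_mapping (id, hashed_id, pii_value, delete_date)
    VALUES (1, @hashed_id, 'John Doe', DATE_ADD(NOW(), INTERVAL 30 DAY));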

To delete the PII value upon request, we can simply delete the record from the hashed ID mapping table. The hashed ID can remain in the fact table for traceability purposes.

    -- Delete PII value upon request
    DELETE FROM hashed_id_mapping
    WHERE id = 1;

Periodically, we can delete records from the fact table based on a data retention policy.

    -- Delete records from fact table based on retention policy.
    -- The subquery reads only the mapping table: MySQL rejects a DELETE whose
    -- subquery selects from the table being deleted from (error 1093).
    DELETE FROM fact_table
    WHERE hashed_id IN (
        SELECT hashed_id
        FROM hashed_id_mapping
        WHERE delete_date <= NOW()
    );
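To try the mapping-table approach end to end without a MySQL server, the sketch below reproduces it with Python's built-in `sqlite3` module. It is a simplified illustration under stated assumptions: the tables are trimmed to a few columns, the helper names `load_row` and `forget` are hypothetical, and the ID is a random token from `secrets.token_hex`, in line with the randomly generated ID described above.

```python
import secrets
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE fact_table (id INTEGER PRIMARY KEY, hashed_id TEXT, value1 TEXT);
    CREATE TABLE hashed_id_mapping (
        id INTEGER PRIMARY KEY, hashed_id TEXT UNIQUE, pii_value TEXT
    );
""")


def load_row(pii_value: str, value1: str) -> str:
    """Store a fact row under a random token; only the mapping table links it to PII."""
    row = conn.execute(
        "SELECT hashed_id FROM hashed_id_mapping WHERE pii_value = ?", (pii_value,)
    ).fetchone()
    # Reuse the existing token for a known customer, otherwise create a random one.
    token = row[0] if row else secrets.token_hex(32)
    if row is None:
        conn.execute(
            "INSERT INTO hashed_id_mapping (hashed_id, pii_value) VALUES (?, ?)",
            (token, pii_value),
        )
    conn.execute(
        "INSERT INTO fact_table (hashed_id, value1) VALUES (?, ?)", (token, value1)
    )
    return token


def forget(pii_value: str) -> None:
    """Handle a deletion request by removing only the mapping row."""
    conn.execute("DELETE FROM hashed_id_mapping WHERE pii_value = ?", (pii_value,))


token = load_row("John Doe", "order-1")
load_row("John Doe", "order-2")
forget("John Doe")
```

After `forget` runs, both fact rows still share one token, so aggregate analysis keeps working, but nothing in the database ties that token back to the customer.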

Finally, the general steps for deidentifying a data lake can be outlined as follows:

1. Load the data from the data lake into memory.
2. Identify any personally identifiable information (PII) within the data.
3. Apply a deidentification algorithm to the PII. This may involve techniques such as masking, tokenization, or encryption.
4. Replace the original PII values with the deidentified values in the data.
5. Save the updated data back to the data lake.
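The steps listed above can be sketched in a few lines of Python. This is a minimal illustration, assuming records are plain dictionaries, that the PII fields are already known by name, and that tokenization with a keyed hash (HMAC-SHA256) is the chosen technique. The field names and the key are hypothetical, and reading from and writing back to the data lake is left out.

```python
import hashlib
import hmac

# Hypothetical field names and key; a real pipeline would load the key
# from a secrets manager rather than hard-coding it.
PII_FIELDS = {"name", "email"}
SECRET_KEY = b"replace-with-a-managed-secret"


def tokenize(value: str) -> str:
    """Keyed hash: stable for joins, not recomputable without the key."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict) -> dict:
    """Return a copy of the record with PII fields replaced by tokens."""
    return {
        key: tokenize(value) if key in PII_FIELDS and value is not None else value
        for key, value in record.items()
    }


# Step 1: data already loaded into memory (sample record for illustration).
records = [{"name": "John Doe", "email": "john@example.com", "country": "US"}]
# Steps 2-4: identify PII fields, tokenize them, and replace the originals.
cleaned = [deidentify(r) for r in records]
```

Because the same input always maps to the same token under a given key, deidentified datasets can still be joined on the tokenized fields. Rotating or destroying the key breaks that link.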

Deidentification of sensitive data has several advantages

Protecting privacy: Deidentification can protect the privacy of individuals by removing or obscuring personally identifiable information (PII) from datasets.

Reducing the risk of data breaches: By removing or obscuring PII, deidentification can reduce the risk of data breaches that can result in the unauthorized access, use, or disclosure of sensitive information.

Facilitating data sharing: Deidentified data can be shared with researchers, analysts, and other parties with a much lower risk of compromising the privacy of individuals or violating data protection laws, provided the data cannot reasonably be re-identified.

Enabling data analysis: Deidentified data can be used for analysis and modeling, enabling insights and discoveries that can inform decision-making and drive innovation.

Compliance with regulations: Deidentification can help organizations comply with data protection regulations, such as the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA).